AI Governance Checklist for Data Privacy Compliance

15 Dec 2025

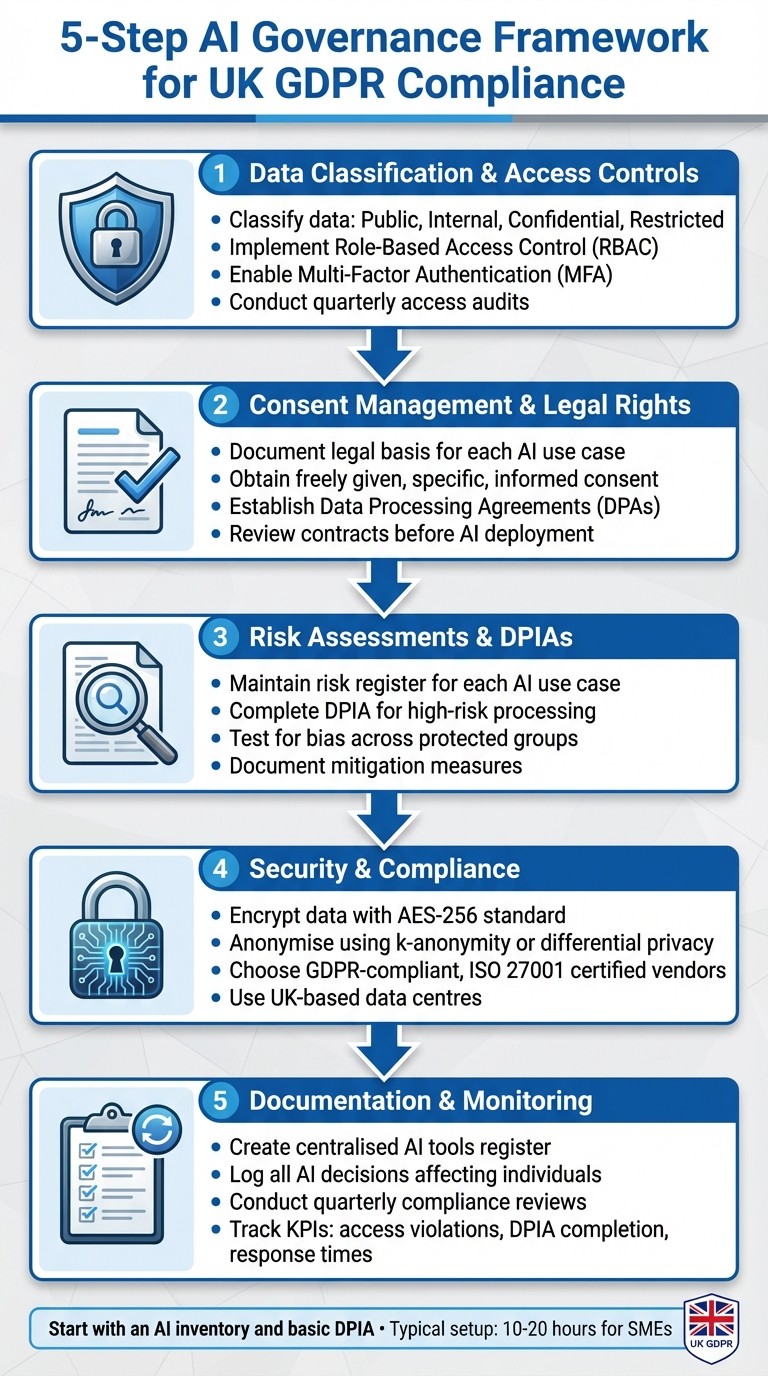

Practical AI governance checklist for UK SMEs to meet GDPR and Data Protection Act requirements: data classification, DPIAs, vendor checks, bias testing and encryption.

AI governance ensures your systems comply with data privacy laws like the UK GDPR and Data Protection Act 2018. Without proper controls, businesses risk fines, reputational damage, and operational disruptions. Here’s a quick breakdown of what you need to know:

Key Risks: Shadow AI (unapproved tools), improper data use, and weak security measures can lead to breaches.

Core Actions:

Classify data (e.g., public, restricted) and limit access with RBAC and MFA.

Ensure lawful data processing with clear legal bases and consent management.

Assess risks using Data Protection Impact Assessments (DPIAs) and bias testing.

Secure data with encryption, anonymisation, and access monitoring.

Evaluate AI vendors for compliance (GDPR, ISO 27001, UK data residency).

Ongoing Steps: Keep records of AI tools, decisions, and risks. Regularly review access controls, logs, and compliance metrics.

Start by creating an inventory of your AI tools and conducting a basic DPIA. Tools like GuidanceAI can simplify compliance for UK SMEs by providing tailored governance support.

5-Step AI Governance Framework for UK GDPR Compliance

Data Privacy Controls for AI Systems

Data Classification and Access Controls

Organising your data into categories like public, internal, confidential, and restricted is a critical first step. For instance, sensitive information such as client financial records, personal details, or special category data (e.g., health or biometric data) should be classified as restricted. Once your data is sorted, establish clear rules for what can and cannot be shared with AI tools. A straightforward guideline could be: "Do not input special category data, client financial information, or trade secrets into public AI platforms like ChatGPT."

To further safeguard your data, apply role-based access control (RBAC). This limits access to information based on an employee’s specific role, ensuring they only interact with data relevant to their responsibilities. This is particularly crucial for AI tools like Microsoft Copilot, which can access vast amounts of internal data and might expose gaps in permissions on a large scale. Strengthen security by enabling multi-factor authentication (MFA) on systems linked to AI and conducting regular reviews - such as quarterly audits - of access permissions. This helps to remove outdated entries and reduce excessive access within groups. Regular audits are a straightforward way to lower risks.

Consent Management and Legal Rights

Once your data is categorised and access is controlled, ensure that all AI-related data processing is based on a clear and lawful rationale. For compliance with UK GDPR, document the legal basis for each AI use case - whether it’s consent, a contractual obligation, legitimate interests, or another lawful ground - and include this in your processing activities register. If consent is required (for instance, when using AI for personalised marketing), it must be freely given, specific, informed, and unambiguous. Keep detailed records of consent, noting the time, date, method, and scope, and make it easy for individuals to withdraw their consent if they choose.

Before using client data with AI tools, review contracts and Data Protection Impact Assessments (DPIAs) to ensure AI-based processing is already permitted. If it isn’t, update the terms or obtain explicit written consent from clients beforehand. For example, an e-commerce SME planning to use AI for personalised analytics should confirm it has the rights to customer data and document all sources in a central inventory. Additionally, establish Data Processing Agreements (DPAs) with AI vendors. These agreements should outline the purpose of processing, data categories, security measures (like encryption and access controls), and procedures for data deletion or return once the contract ends.

Risk Assessments and Bias Mitigation

Privacy Impact Assessments

To ensure compliance with UK GDPR, map each AI system against its requirements to spot potential concerns. Your assessment should highlight risks like unlawful data processing, collecting excessive information, unclear legal bases, ambiguous purposes, or weak security measures. Pay special attention to potential discriminatory outcomes in areas like hiring, credit decisions, or pricing, as these could violate equality standards or damage your organisation’s reputation.

Maintain a risk register for every AI use case, detailing the data categories involved, affected groups, and severity or likelihood scores. Expand existing risk management templates - such as those used for information security or ISO 27001 - by including AI-specific risks and identifying impacted data subjects. Link each use case to principles like lawfulness, transparency, data minimisation, security, and automated decision-making.

Under UK GDPR, a Data Protection Impact Assessment (DPIA) is required when processing is likely to pose a high risk to individuals. Examples include systematic profiling, large-scale monitoring, or processing sensitive data. Use a simple screening checklist with yes/no questions such as:

"Does this AI system profile individuals?"

"Does it make or support decisions with significant effects (e.g., hiring, credit, or access to services)?"

"Does it involve special-category or children's data?"

"Is the processing large-scale or systematic?"

If two or more answers are "yes", complete a DPIA. The DPIA doesn’t need to be lengthy - just document key details like the system’s purpose, lawful basis, data flows, third parties involved, risks (e.g., misclassification, unfair denial of services, or data breaches), and mitigation measures. These measures might include data minimisation, human oversight, clear appeal mechanisms, and encryption.

Once this is in place, follow up with thorough bias testing and measures to ensure decision-making transparency.

Testing for Bias and Explainability

After identifying risks, test your AI system for bias by starting with a data representativeness review. List key fairness attributes like gender, age, region, or disability, and check for imbalances in the dataset. If sensitive attributes aren’t collected, consider using proxies or sampling techniques - such as manual case reviews or postcode-based socio-economic checks - while avoiding unlawful or intrusive profiling.

Conduct stratified performance testing by calculating accuracy or error rates for different groups. Look for disparities in approval, rejection, or error outcomes. For deeper analysis, submit near-identical test cases that vary only by a protected attribute (e.g., postcode, educational background, or employment gap) and compare the results. If significant disparities are found, review the input features, adjust or remove those contributing to bias, retrain the model with more balanced data, and introduce manual reviews for edge cases. Document all adjustments and retest until disparities fall within acceptable limits.

Test the system further with edge cases and adversarial inputs. These might include unusual prompts, attempts to extract confidential information, or instructions designed to bypass internal safeguards. This helps ensure your AI system remains reliable under challenging conditions.

Finally, create clear and concise model cards that outline the AI system’s purpose, data sources, limitations, and fair-use boundaries. For decisions affecting individuals, provide plain-English summaries of the key factors influencing outcomes and explain how users can challenge or appeal decisions if needed. This transparency builds trust and ensures compliance with fairness standards.

Security and Compliance in AI Tools

When integrating AI into your business, safeguarding data and adhering to compliance standards is non-negotiable. Robust security measures and privacy protocols are essential to protect sensitive information and minimise risks.

Data Encryption and Anonymisation

Encrypt sensitive data before processing it with AI systems. Use proven encryption standards like AES-256 to secure data both at rest and during transit. For UK-based SMEs, cloud providers such as Microsoft Azure and AWS offer automatic encryption and UK data residency options, ensuring compliance with GDPR from data collection through to processing.

Anonymise personal data wherever feasible by applying techniques like k-anonymity, differential privacy, or tokenisation. These methods strip away or mask identifiable information, reducing the risk of re-identification. For example, an e-commerce SME could anonymise customer purchase data when deploying an AI-powered recommendation engine, maintaining privacy while extracting actionable insights. Tools such as ARX (open-source) or Google Cloud DLP make anonymisation accessible, even for smaller organisations.

Integrate AI systems with existing access control protocols to maintain consistent security measures. Monitor access logs specific to AI activities to spot anomalies, unauthorised access, or unusual data extraction patterns. Regularly reviewing these logs not only helps detect potential breaches early but also ensures an audit trail for compliance.

Once data is secure and anonymised, the next step is to choose AI solutions that are designed to meet strict privacy and compliance standards.

Choosing Privacy-Compliant AI Systems

After implementing encryption and anonymisation, it’s critical to evaluate AI tools with privacy and compliance in mind. Assess AI vendors using a detailed checklist. Look for solutions that:

Are GDPR-compliant and certified under standards like ISO 27001 or Cyber Essentials.

Use UK-based data centres to ensure data sovereignty.

Provide transparent data processing agreements (DPAs), audit logs, and user opt-out options.

Pay attention to details such as where data is stored, encryption practices, retention policies, and whether sub-processors are involved. Confirm that AI models do not retain or misuse customer data.

Avoid consumer-grade AI tools for handling confidential or proprietary information. Many of these tools reserve the right to use user prompts for model training and may store data outside the UK or EU. Instead, opt for enterprise-level solutions that offer advanced privacy controls, such as contractual DPAs, adjustable data retention policies, and robust administrative settings. Define clear categories for "safe" and "restricted" data inputs, and implement a shadow AI policy to guide employees on approved and prohibited tools, complete with practical examples.

Documentation and Continuous Monitoring

Once you've implemented security protocols, the next step is to maintain thorough records and establish regular review cycles. This ensures your AI systems remain compliant with evolving regulations and align with your business's growth.

Documenting AI Processes and Decisions

Start by creating a centralised register for all AI tools. Include details like the tool's purpose, the types of data it processes, the legal basis for its use, the designated owner, and its risk level. This register will act as your go-to reference for audits or regulatory inquiries.

For each tool, document key elements such as training data sources, preprocessing methods, configuration settings, access controls, retention policies, and the extent of human oversight. When AI systems make decisions that directly impact individuals - like credit approvals or hiring recommendations - log essential details. This should include timestamps, the responsible user or system, data source references, and notes explaining the rationale behind the decision. These records will help you respond confidently to complaints or data subject access requests.

For high-impact use cases, draft clear explanations of how decisions are made. Outline the inputs the system uses, provide a general overview of the decision-making process, and specify where human review is involved. This not only supports UK GDPR’s transparency requirements but also addresses the "right to explanation." Keep centralised logs that detail tool usage, data categories, and decision rationales. For instance, access logs for tools like Microsoft Copilot or internal AI assistants should be regularly exported and securely stored as part of your broader security logging practices.

To streamline this process, store all records in a single, access-controlled repository - such as a "Privacy & AI Governance" workspace. This makes it easier to respond promptly to external audits or regulator inquiries. Using one-page templates for tasks like DPIAs, AI use-case definitions, and vendor assessments can help you maintain consistent documentation without adding unnecessary administrative work. For smaller businesses, setting up privacy governance documentation can typically be done in 10–20 hours using free resources.

These records will serve as the foundation for ongoing performance and compliance reviews.

Regular Monitoring and Updates

With detailed records in place, shift your focus to continuous monitoring. Conduct quarterly reviews and perform additional checks after major system changes or incidents. These reviews should ensure your AI system register and ROPAs are up to date, access controls remain secure, and vendor agreements are current. Verify that logs are complete and retrievable, AI usage aligns with documented purposes, and staff follow data classification policies.

Pay close attention to privacy and security indicators like access violations, failed login attempts, unusual data exports, and any breaches or near-misses involving AI tools. For higher-risk models, track metrics such as accuracy, error rates, false positives/negatives, and fairness indicators like disparate impact ratios across protected groups. Compliance-focused KPIs might include how quickly DPIAs are completed, response times to data subject access requests involving AI, resolution of audit findings, and staff training completion rates.

Treat any substantial changes to an AI system - such as new data sources, retraining, architecture updates, or new use cases - as formal change requests requiring a risk review. Before deploying updates, revise the AI system register and user guidance, then run regression tests to confirm that privacy controls and system performance remain intact. Keep a version history that logs model versions, deployment dates, key changes, and sign-offs to ensure outputs can always be traced back to the specific version in use.

Finally, integrate AI into your existing data breach and incident response plans. Include AI tools and data flows in impact assessment templates, and use centralised logging and alerts to flag unusual AI activity. Set up clear internal channels - such as a dedicated email or form - for staff to report suspected AI misuse or data leaks. Document all incidents, investigations, and corrective actions to demonstrate compliance effectively.

Using GuidanceAI for Scalable AI Governance

For many small and medium-sized enterprises (SMEs), the issue isn't recognising the importance of AI governance - it’s figuring out how to implement it without stretching limited resources. Hiring full-time privacy officers or dedicated compliance teams often isn’t feasible. That’s where GuidanceAI steps in as a practical solution, bridging the resource gap.

GuidanceAI operates like a virtual C-suite adviser, offering boardroom-level expertise in AI governance. Developed by AgentimiseAI and informed by business experts, it provides support in critical areas like data privacy compliance, risk assessments, and regulatory alignment. Think of these Leadership Agents as digital versions of seasoned executives, delivering insights tailored to your business workflows. Instead of struggling to interpret GDPR requirements or conduct privacy impact assessments on your own, your leadership team gains access to recommendations that fit seamlessly into your operations.

One of the challenges SMEs often face is shadow AI usage - when employees adopt AI tools without consistent oversight. GuidanceAI tackles this by creating customised governance checklists for tasks like consent management, data classification, and vetting third-party tools. For example, if your business uses AI for customer personalisation, GuidanceAI can walk you through privacy impact assessments, suggest encryption methods, and help set retention schedules that align with UK GDPR’s explicit consent rules.

The platform is designed to integrate smoothly into your existing workflows. It supports documentation, access audits, and quarterly reviews, all without requiring complex frameworks. Research analysing over 47 AI governance frameworks highlights data protection as the top priority for avoiding breaches. GuidanceAI ensures ongoing oversight in these critical areas, removing the need for a dedicated ethics team while maintaining robust governance.

Conclusion

Ensuring your AI systems comply with data privacy laws doesn't have to involve a complex, enterprise-level approach. By focusing on a few key actions, you can address common AI-related risks while staying in line with UK data privacy regulations. These actions include: defining roles and approvals, securing data through classification and encryption, embedding consent and user rights, assessing risks and biases, and maintaining ongoing monitoring. For smaller organisations, these steps provide a practical way to manage compliance without unnecessary complications.

To keep things manageable, integrate AI governance into your existing processes, such as data protection inventories, regular management meetings, and compliance reviews. Use straightforward metrics, like tracking privacy incidents involving AI, monitoring error rates in your models, and ensuring staff complete relevant training. These measures give leadership a clear view of progress while avoiding unnecessary strain on your team. They also build directly on the privacy and risk controls discussed earlier.

Strong governance not only mitigates risks associated with unauthorised or poorly managed AI use but also ensures your AI investments drive measurable results at a strategic level. Security measures like encryption, anonymisation, and regular access reviews are essential and should align with recognised standards like ISO 27001 or Cyber Essentials wherever possible.

You don’t need to start from scratch. Tools like GuidanceAI by AgentimiseAI can act as virtual advisers, offering practical checklists and policies tailored to your workflows. Designed for resource-limited, fast-moving SMEs, these platforms provide expert guidance in a flexible, accessible way.

Start small: create an inventory of your AI tools and conduct a basic privacy impact assessment for your main use case. Taking incremental steps now is far more effective than waiting for an ideal framework that might never materialise.

FAQs

How can SMEs start ensuring their AI systems comply with data privacy regulations?

To kick off AI governance aimed at ensuring data privacy compliance, small and medium-sized enterprises (SMEs) should begin with an AI discovery workshop. This step helps pinpoint how AI is utilised across the organisation and provides a clear overview of data processing activities. Following this, it's essential to set up well-defined data privacy policies and introduce controls that align with UK regulations, such as the GDPR.

Leadership involvement is key to success. Tools like GuidanceAI can assist decision-makers by embedding accountability into workflows and encouraging best practices, making compliance a core part of your business operations.

How can SMEs manage the risks of unauthorised AI use within their organisation?

To tackle the risks associated with unauthorised AI use, SMEs should begin by creating clear policies that define acceptable AI practices. It's crucial that every employee understands these guidelines. Hosting regular training sessions can educate staff on the risks of shadow AI and highlight the importance of adhering to data privacy laws.

On top of that, using tools such as AI-driven leadership guidance platforms can offer valuable oversight. These platforms can track AI usage, ensure it aligns with the organisation’s objectives, and help maintain compliance with data protection laws. This approach not only mitigates risks but also enhances operational efficiency.

What should a Data Protection Impact Assessment (DPIA) for AI systems cover?

When it comes to AI systems, a Data Protection Impact Assessment (DPIA) is essential for safeguarding personal data and ensuring compliance with data protection laws. Here’s what it should cover:

Mapping data flows: This involves tracing how personal data is collected, processed, and stored to gain a clear picture of its journey.

Identifying data types and purposes: Pinpoint the specific types of personal data being handled and clarify why this data is being processed.

Evaluating privacy risks: Assess potential threats to individuals' privacy and their rights, ensuring no stone is left unturned.

Implementing risk-reduction measures: Define actionable steps to minimise risks and maintain compliance with regulations like the UK GDPR.

Setting up ongoing monitoring: Establish regular review processes to ensure the system remains compliant over time.

By carrying out a thorough DPIA, organisations can address privacy concerns early, building trust and demonstrating transparency to stakeholders.